Writing and running tests with pytest

The goals of testing

Broadly speaking, there are two classes of testing: functional and non-functional.

| Testing type | Goal | Automated? |
|---|---|---|
| Functional testing | | |
| – Unit testing | Ensure an individual function/class works as intended | yes |
| – Integration testing | Ensure that functions/classes can work together | yes |
| – System testing | End-to-end test of a software package | partly |
| – Acceptance testing | Ensure that software meets business goals | no |
| Non-functional testing | | |
| – Performance testing | Test of speed/capacity/throughput of the software in a range of use cases | yes |
| – Security testing | Identify loopholes or security risks in the software | partly |
| – Usability testing | Ensure the user experience is to standard | no |
| – Compatibility testing | Ensure the software works on a range of platforms or with different versions of dependent libraries | yes |

The different testing methods are conducted by different people and have different aims. Not all of the testing can be automated, and not all of it is relevant to all software packages. For someone who is developing code for personal use, use within a research group, or use within the astronomical community, the following test modalities are relevant.

Unit testing

In this mode each function/class is tested independently against a set of known inputs and the expected outputs or behavior. The goal here is to explore the desired behavior, capture edge cases, and ideally test every line of code within a function. Unit testing can be easily automated, and because the desired behaviors of a function are often known ahead of time, unit tests can be written before the code even exists.
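As a minimal sketch of what this looks like, the block below tests a small function against known values and edge cases. The function wrap_ra is hypothetical and is not part of the example project; it only stands in for whatever function you are testing.

```python
def wrap_ra(ra_deg):
    """Hypothetical function under test: wrap a right ascension into [0, 360) degrees."""
    return ra_deg % 360.0


def test_wrap_ra_leaves_valid_values_alone():
    # A value already in range should come back unchanged.
    assert wrap_ra(10.0) == 10.0


def test_wrap_ra_handles_edge_cases():
    # The upper boundary, a negative value, and a value beyond one full turn.
    assert wrap_ra(360.0) == 0.0
    assert wrap_ra(-10.0) == 350.0
    assert wrap_ra(725.0) == 5.0
```

Each test function checks one aspect of the behavior, so a failure points directly at the case that broke.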

Integration testing

Integration testing is a level above unit testing. Integration testing is where you test that functions/classes interact with each other as documented/desired. It is possible for code to pass unit testing but to fail integration testing. For example, the individual functions may work properly, but the format or order in which data are passed/returned may be different from what the receiving function expects. Integration tests can be automated. If the software development plan is detailed enough then integration tests can be written before the code exists.
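The sketch below illustrates the kind of mismatch described here. Both functions are hypothetical stand-ins: one produces a position and the other consumes it. If the producer were changed to return (dec, ra) instead of (ra, dec), each function could still pass its own unit tests, but the integration test would fail.

```python
def generate_position():
    """Hypothetical producer: returns a (ra, dec) pair in degrees."""
    return (83.6, 22.0)


def format_position(ra, dec):
    """Hypothetical consumer: expects ra first, then dec."""
    if not -90.0 <= dec <= 90.0:
        raise ValueError("declination out of range: {0}".format(dec))
    return "{0:.2f},{1:.2f}".format(ra, dec)


def test_generate_then_format():
    # Integration test: feed the real output of one function into the other.
    ra, dec = generate_position()
    assert format_position(ra, dec) == "83.60,22.00"
```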

System testing

System testing is integration testing, but with integration over the full software stack. If the software has a command line interface then system testing can be run as a sequence of bash commands.
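The same idea can be sketched in Python by launching the command line tool as a subprocess and checking its exit code and output. The command below is only a stand-in (a one-line Python script that prints two lines of CSV); in a real project you would replace COMMAND with the actual command line call for your software.

```python
import subprocess
import sys

# Stand-in for a real command line tool: prints a header and one row of data.
COMMAND = [sys.executable, "-c", "print('ra,dec'); print('10.0,-45.0')"]


def test_command_line_run():
    # Run the command end-to-end, exactly as a user would from the shell.
    result = subprocess.run(COMMAND, capture_output=True, text=True, timeout=60)
    assert result.returncode == 0, result.stderr
    lines = result.stdout.strip().splitlines()
    assert lines[0] == "ra,dec"
    assert len(lines) == 2
```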

Performance testing

Performance testing is an extension of benchmarking and profiling. During a performance test the software is run and profiled, and passing the test means meeting some predefined criteria. These criteria can be set in terms of:

- peak or average RAM use

- (temporary) I/O usage

- execution time

- CPU/GPU utilization

Performance testing can be automated, but the target architecture needs to be well specified in order to make useful comparisons. Whilst unit/integration/system testing typically aims to cover all aspects of a software package, performance testing may only be required for some subset of the software. For software that will have a long execution time on production/typical data, testing can be time-consuming. It is therefore often best to have a smaller data set which can be run in a shorter amount of time as a preamble to the longer running test case.
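A minimal sketch of an automated performance test is shown below, using only the standard library to measure execution time and peak memory. The workload sum_of_squares and the two budgets (5 seconds, 50 MB) are assumptions chosen for illustration; real budgets depend on your software and target hardware.

```python
import time
import tracemalloc


def sum_of_squares(n):
    """Hypothetical workload standing in for the real code under test."""
    return sum(i * i for i in range(n))


def test_sum_of_squares_performance():
    tracemalloc.start()                      # begin tracking Python memory allocations
    start = time.perf_counter()
    result = sum_of_squares(100_000)
    elapsed = time.perf_counter() - start
    _, peak = tracemalloc.get_traced_memory()
    tracemalloc.stop()

    assert result == 333328333350000         # the answer must still be correct
    assert elapsed < 5.0                     # time budget in seconds (assumed)
    assert peak < 50 * 1024 * 1024           # peak memory budget in bytes (assumed)
```

Note that tracemalloc only sees memory allocated by Python itself and slows the run down somewhat, so timing and memory are often measured in separate runs.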

Compatibility testing

Compatibility testing is all about ensuring that the software will run in a number of target environments or on a set of target infrastructure. Examples could be that the software should run on:

- Python 3.6, 3.7, and 3.8

- OSX, Windows, and Linux

- Pawsey, NCI, and OzStar

- Azure, AWS, and Google Cloud

- iPhone and Android

Compatibility testing requires testing environments that provide the given combination of software/hardware. Compatibility testing typically makes a lot of use of containers to test different environments or operating systems. Supporting a diverse range of systems can add a large overhead to the development/test cycle of a software project.

Developing tests

Ultimately, tests are put in place to ensure that the actual and desired operation of your software are in agreement. The actual operation of the software is encoded in the software itself. The desired operation of the software should also be recorded for reference, and the best place to do this is in the user/developer documentation (see previous lesson).

Regression testing is a strategy for developing test code which involves writing tests for each bug or failure mode that is identified. In this strategy, when a bug is identified, the first course of action is to develop a test case that will expose the bug. Once the test is in place, the code is altered until the test passes. This strategy can be very useful for preventing bugs from reoccurring, or at least identifying them when they do reoccur so that they don’t make their way into production.
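As an illustration, consider a bug that is common in astronomy code: a declination such as -00:30:00 loses its sign because the degrees field parses to zero. The function parse_dec below is hypothetical (it is not part of the example project) and is shown in its fixed form, together with the regression test that would have been written first to expose the bug.

```python
def parse_dec(text):
    """Hypothetical function: convert a 'DD:MM:SS' declination string to degrees."""
    text = text.strip()
    # Take the sign from the string itself, since '-00' parses to zero.
    sign = -1.0 if text.startswith("-") else 1.0
    degrees, minutes, seconds = (abs(float(part)) for part in text.split(":"))
    return sign * (degrees + minutes / 60.0 + seconds / 3600.0)


def test_negative_zero_degrees_keeps_sign():
    # Regression test for the reported bug: the sign must survive a zero degrees field.
    assert parse_dec("-00:30:00") == -0.5


def test_ordinary_values_still_work():
    assert parse_dec("+12:30:00") == 12.5
    assert parse_dec("-45:00:00") == -45.0
```

The regression test stays in the test suite after the fix, so the bug is flagged immediately if a later change re-introduces it.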

Test driven development is a strategy that involves distilling the software requirements into test cases before the software is fully developed. This strategy aims to prevent bugs before they occur and is useful for keeping a software project in scope. Unfortunately, most software developed in an academic setting does not follow a formal development cycle that includes the collection of requirements and use cases (or user stories). However, test driven development can be incorporated into a project when new features are being developed.

How to write and run tests

In the lesson “An example development cycle for fixing a bug” we already covered one example of writing a test for the example project. We will recap and extend this example here.

For this course we are using pytest (docs) as it is both a great starting point for beginners, and also a very capable testing tool for advanced users. It should therefore serve you well until you become an ultra testing ninja.

pytest can be installed via pip. Since the end user will typically not run tests on our software, we won't include pytest in our requirements.txt file. We could make a new file called test_requirements.txt to keep track of the modules used for testing.

Writing tests

In order to use pytest we need to structure our test code in a particular way. Firstly we need a directory called tests which contains test modules named test_<item>.py, which in turn contain functions called test_<thing>. The functions themselves need to do one of two things:

- return None if the test was successful

- raise an AssertionError if the test failed
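In practice the AssertionError is usually raised by a plain assert statement, which raises it automatically whenever its condition is false. A minimal sketch, using an illustrative test that is not part of the example project:

```python
def test_sorted_is_ascending():
    values = sorted([3, 1, 2])
    # A false condition here raises AssertionError with the given message.
    assert values == [1, 2, 3], "sorted() should return ascending order"
    # Falling off the end of the function returns None, which counts as a pass.
```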

Here is an example test in the file tests/test_module.py:

    def test_module_import():
        try:
            import skysim
        except Exception as e:
            raise AssertionError("Failed to import skysim")
        return

Running tests

Previously we relied on the following python-fu to run the tests and report the results:

    if __name__ == "__main__":
        # introspect and run all the functions starting with 'test'
        for f in dir():
            if f.startswith('test'):
                try:
                    globals()[f]()
                except AssertionError as e:
                    print("{0} FAILED with error: {1}".format(f, e))
                else:
                    print("{0} PASSED".format(f))

However, pytest gives us a much nicer interface for running and seeing the results of tests. So long as our tests are laid out in the specified directory/file/name structure then we can run all our tests as follows:

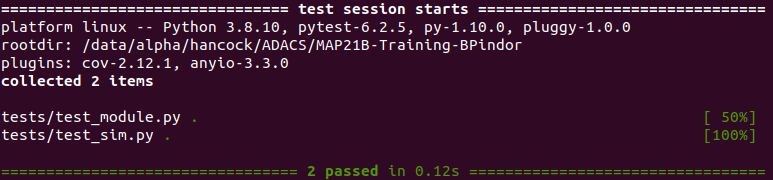

In the above you can see that pytest has navigated through the tests/ directory, found all the test files, and then within each file found the test functions. Each function that passes is represented by a small . after the file name. In this example there is just one function per file, but we can group our tests into different files depending on our own preference.

If I modify the skysim.sim.generate_positions function to re-introduce the bug that was previously explored then I would get the following output from pytest:

You can see that all the tests are still run, and that the tests/test_sim.py file has one test that failed as indicated by the red F. Once all the tests have been run we then get a report of the failures. For each test function that fails we get a report similar to the above.

Test metrics

Some common metrics are:

- the fraction of tests that pass,

- the fraction of lines of code that were executed during testing,

- the fraction of use cases that are included in testing.

Pass fraction

pytest will report this directly both in terms of the percentage and the raw number of fail/pass. Clearly the goal here is 100%, and anything less means that there are problems with your code (or tests) that need to be resolved.

Code coverage

As well as having all your tests pass when run, another consideration is the fraction of code which is actually tested. A basic measure of this is called the testing coverage, which is the fraction of lines of code being executed during the test run. Code that is not tested cannot be validated, so the coverage metric helps you to find parts of your code that are not being run during the test.
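To make the idea concrete, the toy sketch below records which lines of a function are executed by a given call, using the standard library hook sys.settrace. It is only an illustration of what a coverage tool measures, not a description of how any particular tool is implemented, and clip_declination is a hypothetical function.

```python
import sys


def clip_declination(dec):
    # Hypothetical function under test: clamp to the valid range [-90, 90].
    if dec > 90:
        return 90.0
    if dec < -90:
        return -90.0
    return float(dec)


def executed_lines(func, *args):
    """Run func(*args) and return the line numbers of func that were executed."""
    target = func.__code__
    hits = set()

    def tracer(frame, event, arg):
        # Record 'line' events that belong to the function we care about.
        if event == "line" and frame.f_code is target:
            hits.add(frame.f_lineno)
        return tracer

    previous = sys.gettrace()
    sys.settrace(tracer)
    try:
        func(*args)
    finally:
        sys.settrace(previous)   # always restore the previous trace function
    return hits


# One "test" only exercises the in-range path; three "tests" reach every return.
one_test = executed_lines(clip_declination, 10)
all_tests = (one_test
             | executed_lines(clip_declination, 100)
             | executed_lines(clip_declination, -100))
print(len(one_test), "lines hit by one test;", len(all_tests), "lines hit by three tests")
```

The lines that appear in all_tests but not in one_test are exactly the two out-of-range branches, which is the kind of gap a coverage report is designed to reveal.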

pytest can track test coverage using the pytest-cov plugin (install via pip).

We can run pytest and get some coverage summary output right to the terminal.

    $ pytest --cov=skysim --cov-report=term

This short report shows a summary per file and then a total for the entire package. pytest will decide how many lines of code (actually, how many statements) are valid for testing, and then count each as either a hit or a miss while the tests are being performed.

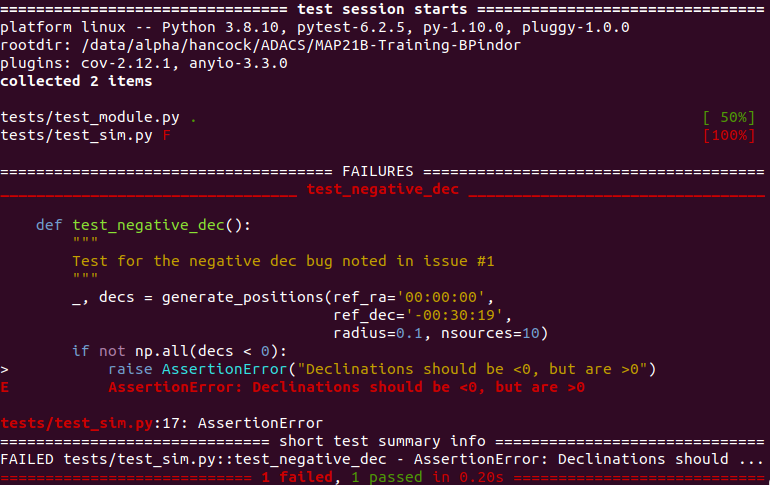

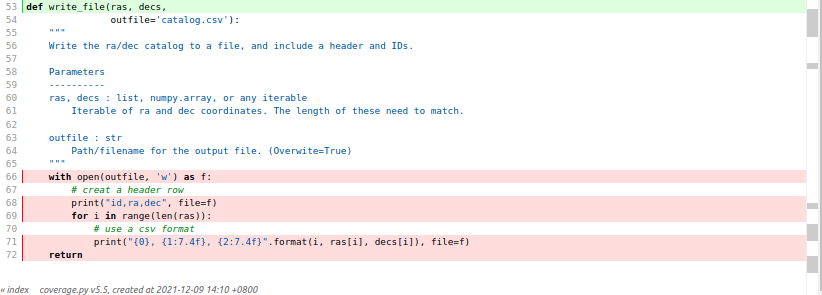

If we instead create an html output with --cov-report=html then pytest will generate a website in the htmlcov directory. If we browse this site and look at the sim.py file we can see the following.

Here the green lines indicate lines of code that were identified as being valid for testing and were executed during the tests. The red lines are those that were not executed. The non-highlighted (white) lines are empty lines, comments, and docstrings, which are not part of the coverage accounting. We can see from the above that all of the lines in generate_positions were executed during testing, but that the body of write_file was not. Note that line 53 was executed during testing, but not any of the contents of the function. This is because function and class definition statements are executed when the module is imported, whereas the body of a function only runs when the function is called.

From the above report we can see that there are functions that were not tested at all, so we should probably go away and write some tests for them.

Ideally the goal of testing would be to achieve 100% code coverage. This would require that all branches of your if/else and try/except/else/finally blocks are eventually executed. In practice there are many branches of your code that you might include to catch rare corner cases, or rare use cases, and including all of these in your test suite may be extremely time consuming to write and/or execute. For large projects, code coverage of 80% is considered good, and anything over 90% excellent.
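As a small sketch of what covering every branch means, the hypothetical function below has a normal path and an exception path, and each needs its own test before the function is fully covered. The name flux_ratio is an assumption made for illustration.

```python
def flux_ratio(a, b):
    """Hypothetical function with a normal branch and an error branch."""
    try:
        ratio = a / b
    except ZeroDivisionError:
        return float("inf")
    else:
        return ratio


def test_flux_ratio_normal_branch():
    # Exercises the try/else path only.
    assert flux_ratio(6.0, 3.0) == 2.0


def test_flux_ratio_zero_denominator_branch():
    # Exercises the except path, which the first test never reaches.
    assert flux_ratio(1.0, 0.0) == float("inf")
```

With only the first test in place, a coverage report would mark the except branch as missed.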

At the end of the day, it is up to you as the developer to look through the detailed coverage report and decide if you care about certain parts of the code not being tested.

Use case coverage

As a guide to what tests should be created, or what lines of code need to be tested, it is good to consider a series of use cases or user stories. Once you have decided on these user stories, you can then break them down into steps and identify which modules/functions/classes are being used, what some example input would look like and what the expected behavior/output would be.

If you are engaging in test driven development then generating user stories is already part of the software design stage, so these user stories will already be at hand and don’t require extra work. As noted previously, even if a project has been developed in an ad-hoc manner, or grown organically over time, the addition of a new feature is always a good opportunity to take on a test driven development strategy.

The mentality behind use case coverage is that even though not all the code may be tested, or it may not be tested for every possible use, you can ensure that all of the intended (or supported) uses of your software are being covered.

Summary

In this lesson we learned

- about the different types and purposes of testing,

- when to develop tests,

- how to write unit tests,

- how to run tests with pytest,

- how to report and interpret test coverage.